- Published on

An Introduction to Maximum Likelihood Estimation (MLE)

- Authors

- Name

- Dmitri Eulerov

Maximum Likelihood Estimation (MLE) is a method for estimating the parameters of an assumed probability distribution from observed data. It works by maximizing a likelihood function, so that under the fitted model the observed data is as probable as possible.

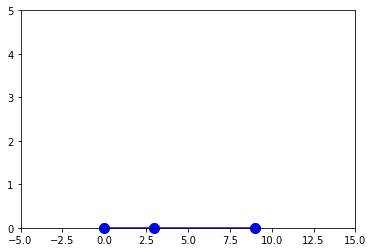

Let's walk through a visual example:

How would we model a probability distribution that best describes the data?

A simple Gaussian could be a good fit here. Let's start with that. This is our assumed probability distribution.

Note: In practice, the functional form can vary (Gaussian, exponential, gamma, etc.).

As we've decided to use a Gaussian as our probability distribution, what are the optimal parameters of this Gaussian to maximize the likelihood of observing this data?

This is exactly the question that MLE answers.

Estimating our optimal parameters

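In MLE, we are attempting to estimate a parameter $\theta$ of a given probability distribution $f(x; \theta)$. Our objective is to find the value of $\theta$ that maximizes the likelihood function $L(\theta)$, which is defined as:

$$L(\theta) = \prod_{i=1}^{N} f(x_i; \theta)$$

where $x_1, \dots, x_N$ are the observations from the dataset (assumed independent and identically distributed) and $f(x; \theta)$ is the probability density function of the probability distribution with parameter $\theta$.

The Maximum Likelihood Estimate of $\theta$ is the value $\hat{\theta}$ that maximizes the likelihood function, so we can solve the optimization problem:

$$\hat{\theta} = \underset{\theta}{\arg\max}\; L(\theta)$$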

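As we are assuming a Gaussian distribution, let's recall the Gaussian equation:

$$f(x; \mu, \sigma) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)$$

where $\mu$ is the mean, $\sigma$ is the standard deviation, and $x$ is the independent variable.

In our case $\theta = (\mu, \sigma)$, and we are solving to maximize the likelihood of observing our data by discovering the optimal $\mu$ and $\sigma$.

Our likelihood function over $N$ samples is then:

$$L(\mu, \sigma) = \prod_{i=1}^{N} \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x_i - \mu)^2}{2\sigma^2}\right)$$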
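To make the likelihood concrete, here is a minimal sketch that evaluates the product of Gaussian densities for two candidate parameter settings. The data values and the function names (`gaussian_pdf`, `likelihood`) are made up for illustration and are not from the original post.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of a Gaussian with mean mu and standard deviation sigma at x."""
    coeff = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))

def likelihood(data, mu, sigma):
    """Product of the densities of all observations (assumes i.i.d. samples)."""
    return math.prod(gaussian_pdf(x, mu, sigma) for x in data)

# Hypothetical toy data, made up for illustration only.
data = [4.8, 5.1, 5.3, 4.9, 5.0, 5.4, 4.7]

# A Gaussian centered near the data should score higher than one centered far away.
good_fit = likelihood(data, mu=5.0, sigma=0.25)
poor_fit = likelihood(data, mu=3.0, sigma=0.25)
print(good_fit > poor_fit)
```

MLE amounts to searching over all candidate `(mu, sigma)` pairs for the one with the highest likelihood, rather than comparing just two.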

We can take the natural log of the likelihood function to make the numbers easier to work with and to convert products into sums. The cumulative product of many probabilities is a tiny number, and taking the logarithm makes it much more manageable. Also, since the logarithm is monotonically increasing, finding the parameters that maximize the log-likelihood is equivalent to finding the parameters that maximize the likelihood.

$$\ln L(\mu, \sigma) = -\frac{N}{2}\ln(2\pi) - N\ln\sigma - \frac{1}{2\sigma^2}\sum_{i=1}^{N}(x_i - \mu)^2$$
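The "tiny number" problem is easy to see numerically. The sketch below, which uses synthetic samples and a hypothetical helper `gaussian_log_pdf`, multiplies 2000 Gaussian densities directly and compares that with summing their logarithms. In double-precision floating point the raw product underflows to zero, while the log-likelihood stays finite.

```python
import math
import random

def gaussian_log_pdf(x, mu, sigma):
    """Natural log of the Gaussian density at x."""
    return (-0.5 * math.log(2.0 * math.pi)
            - math.log(sigma)
            - (x - mu) ** 2 / (2.0 * sigma ** 2))

# Synthetic samples from a standard normal, seeded for repeatability.
random.seed(0)
samples = [random.gauss(0.0, 1.0) for _ in range(2000)]

# Multiplying 2000 densities (each below 0.4) underflows to 0.0.
raw_likelihood = math.prod(math.exp(gaussian_log_pdf(x, 0.0, 1.0)) for x in samples)

# Summing the log-densities gives a finite, usable number.
log_likelihood = sum(gaussian_log_pdf(x, 0.0, 1.0) for x in samples)

print(raw_likelihood)
print(log_likelihood)
```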

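Next, we need to find the peak of the log-likelihood function, i.e. the parameter values that maximize the likelihood of our observed samples. We can do this through differentiation. Let's take the derivative of the log-likelihood function with respect to the parameters $\mu$ and $\sigma$ and set them equal to zero. (Note: for other distributions, setting the derivative to zero may not always work. Some likelihood functions have multiple local peaks where the derivatives are zero; in this case, since we know the functional form is Gaussian and its log-likelihood has a single peak, this works fine.)

$$\frac{\partial \ln L}{\partial \mu} = \frac{1}{\sigma^2}\sum_{i=1}^{N}(x_i - \mu) = 0$$

$$\frac{\partial \ln L}{\partial \sigma} = -\frac{N}{\sigma} + \frac{1}{\sigma^3}\sum_{i=1}^{N}(x_i - \mu)^2 = 0$$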

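Solving for $\mu$ and $\sigma$:

$$\hat{\mu} = \frac{1}{N}\sum_{i=1}^{N} x_i$$

$$\hat{\sigma}^2 = \frac{1}{N}\sum_{i=1}^{N}(x_i - \hat{\mu})^2$$

Look familiar? The maximum likelihood estimates of the parameters $\mu$ and $\sigma^2$ are the sample mean and the sample variance (the version that divides by $N$ rather than $N - 1$), respectively!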
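As a quick sanity check, the following sketch computes the closed-form estimates on made-up data, compares them with the population statistics from Python's standard library, and confirms that nudging either parameter lowers the log-likelihood. The data and the helper `gaussian_log_likelihood` are illustrative assumptions, not part of the original derivation.

```python
import math
import statistics

def gaussian_log_likelihood(data, mu, sigma):
    """Sum of Gaussian log-densities over all observations."""
    n = len(data)
    squared_error = sum((x - mu) ** 2 for x in data)
    return (-0.5 * n * math.log(2.0 * math.pi)
            - n * math.log(sigma)
            - squared_error / (2.0 * sigma ** 2))

# Hypothetical toy data, made up for illustration only.
data = [2.1, 2.9, 3.4, 3.8, 4.0, 4.6, 5.2]
n = len(data)

# Closed-form maximum likelihood estimates.
mu_hat = sum(data) / n
var_hat = sum((x - mu_hat) ** 2 for x in data) / n  # divides by N, not N - 1
sigma_hat = math.sqrt(var_hat)

# The standard library's population statistics use the same formulas.
print(math.isclose(mu_hat, statistics.fmean(data)))
print(math.isclose(var_hat, statistics.pvariance(data)))

# Nudging either parameter away from the estimate lowers the log-likelihood.
best = gaussian_log_likelihood(data, mu_hat, sigma_hat)
nudges = [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]
nudged = [gaussian_log_likelihood(data, mu_hat + dm, sigma_hat + ds) for dm, ds in nudges]
print(all(value < best for value in nudged))
```

Note that `statistics.variance` (without the `p`) divides by $N - 1$ and would not match the maximum likelihood estimate exactly.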

...But we can just directly calculate the mean and standard deviation of our sample set. WTF was the point of this?

The idea here is that MLE provides a framework for estimating the parameters of an assumed distribution; our work here has helped us verify that the estimate we already knew how to compute for a Gaussian is in fact the correct one. This formalizes the why and how. The method of finding optimal parameters varies with the distribution, and there may not always be a closed-form solution like the one in the example above, but I hope you grasped the context of why this could be useful.

This StackOverflow answer by Aksakal answers it quite well:

"In this case, the average of your sample happens to also be the maximum likelihood estimator. So doing all the work to derive the MLE feels like an unnecessary exercise, as you get back to your intuitive estimate of the mean you would have used in the first place. Well, this wasn't "just by chance"; this was specifically chosen to show that MLE estimators often lead to intuitive estimators.

But what if there was no intuitive estimator? For example, suppose you had a sample of iid gamma random variables and you were interested in estimating the shape and the rate parameters. Perhaps you could try to reason out an estimator from the properties you know about Gamma distributions. But what would be the best way to do it? Using some combination of the estimated mean and variance? Why not use the estimated median instead of the mean? Or the log-mean? These all could be used to create some sort of estimator, but which will be a good one?

As it turns out, MLE theory gives us a great way of succinctly getting an answer to that question: take the values of the parameters that maximize the likelihood of the observed data (which seems pretty intuitive) and use that as your estimate. In fact, we have theory that states that under certain conditions, this will be approximately the best estimator. This is a lot better than trying to figure out a unique estimator for each type of data and then spending lots of time worrying if it's really the best choice.

In short: while MLE doesn't provide new insight in the case of estimating the mean of normal data, it in general is a very, very useful tool."
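The gamma case mentioned in the quote can be sketched numerically. The gamma shape parameter has no closed-form maximum likelihood estimate, so the example below maximizes the log-likelihood with a simple search. Everything here is an illustrative assumption rather than the quoted author's method: the data is synthetic, the function names are hypothetical, and the approach (plugging in the optimal rate for each shape, then running a ternary search over the shape) is just one simple option that works because the resulting one-dimensional function has a single peak.

```python
import math
import random

def gamma_profile_log_likelihood(shape, mean_x, mean_log_x, n):
    """Gamma log-likelihood with the rate set to its optimum, rate = shape / mean_x."""
    rate = shape / mean_x
    return n * (shape * math.log(rate) - math.lgamma(shape)
                + (shape - 1.0) * mean_log_x - rate * mean_x)

def fit_gamma(data, lo=1e-3, hi=100.0, iterations=200):
    """Estimate (shape, rate) by ternary search over the shape parameter."""
    n = len(data)
    mean_x = sum(data) / n
    mean_log_x = sum(math.log(x) for x in data) / n
    for _ in range(iterations):
        left = lo + (hi - lo) / 3.0
        right = hi - (hi - lo) / 3.0
        f_left = gamma_profile_log_likelihood(left, mean_x, mean_log_x, n)
        f_right = gamma_profile_log_likelihood(right, mean_x, mean_log_x, n)
        if f_left < f_right:
            lo = left   # the peak lies to the right of `left`
        else:
            hi = right  # the peak lies to the left of `right`
    shape = 0.5 * (lo + hi)
    return shape, shape / mean_x

# Synthetic data: shape 3 and scale 2, i.e. rate 0.5 (seeded for repeatability).
random.seed(42)
data = [random.gammavariate(3.0, 2.0) for _ in range(5000)]

shape_hat, rate_hat = fit_gamma(data)
print(shape_hat, rate_hat)
```

With a few thousand samples the estimates should land close to the true shape of 3 and rate of 0.5, without anyone having to guess an estimator by hand. In practice a numerical optimizer from a scientific library would usually replace the hand-rolled search.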

Final Thoughts

As always, thanks for reading. I know some of the math looks cryptic and can be quite dense, so it's alright to gloss over it a bit if you're not super comfortable with the notation. More importantly, I hope this helped develop some intuition behind what MLE is, and why it might be useful. Stay safe, and happy holidays!